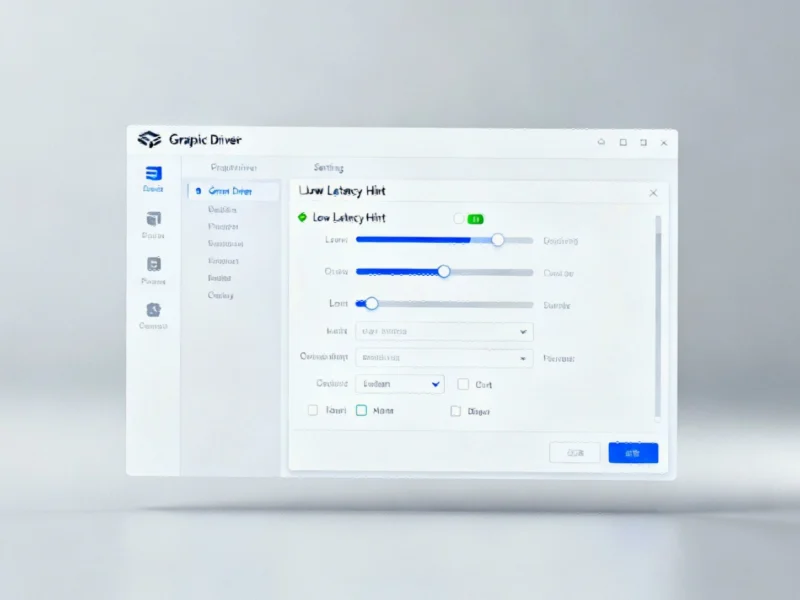

Intel has reportedly implemented support for the Xe driver’s low latency hint in its Vulkan graphics driver stack. The change could improve gaming performance and responsiveness for Linux users running Intel graphics hardware, and it comes as part of ongoing optimizations to Intel’s open-source graphics drivers.

Intel Vulkan Driver Integration Advances Gaming Performance

Intel’s Vulkan graphics driver has reportedly added support for the Xe driver’s low latency hint feature, according to recent activity in the open-source graphics community. The enhancement could improve gaming responsiveness and reduce input lag for users running Intel graphics hardware on Linux systems.