According to Gizmodo, Palantir CTO Shyam Sankar stated in a recent New York Times interview that artificial general intelligence is a “fantasy” and that claims about AI causing mass unemployment are a “fundraising shtick” used by Silicon Valley companies. Sankar specifically criticized what he called “doomerism” in the tech industry, arguing that companies use fears of technological unemployment to attract investment. In the interview, published Thursday, Sankar also defended Palantir’s controversial military partnerships, including work with Israeli defense forces that recently led a Norwegian investor to pull out over human rights concerns. His remarks come amid ongoing debates about AI’s real impact on employment, with companies like Amazon citing AI transformation while laying off thousands. The discussion reveals fundamental tensions in how tech leaders perceive AI’s societal role.

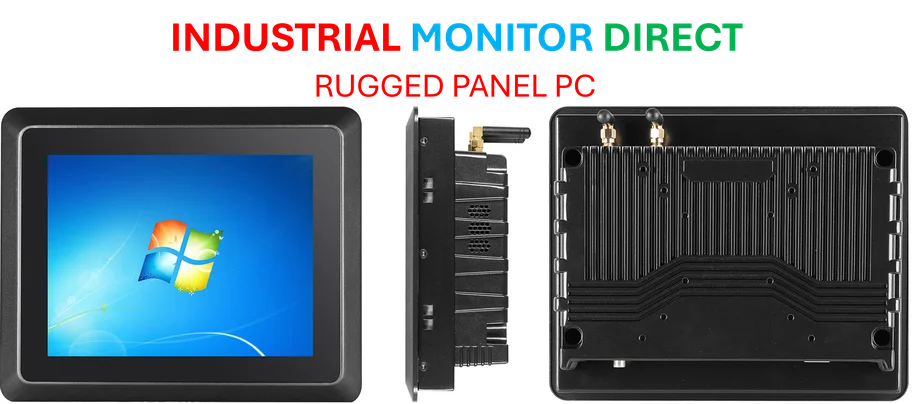

The Convenient Timing of AI Skepticism

Sankar’s dismissal of AGI concerns arrives at a remarkably convenient moment for Palantir’s business model. As a company deeply embedded in government and military contracts, Palantir benefits from positioning AI as a manageable tool rather than an existential threat. This framing serves two purposes: it reassures potential government clients that AI systems won’t become uncontrollable, and it undermines competitors whose valuations depend on AGI narratives. The timing is particularly noteworthy given Palantir’s recent expansion into AI-powered surveillance systems for immigration enforcement and military applications. When a company’s CTO argues that the most advanced forms of AI are impossible while that same company builds increasingly sophisticated AI systems, we must question whether we’re hearing technological assessment or market positioning.

The Employment Impact Reality Check

While Sankar dismisses AI unemployment fears as hype, the evidence suggests a more complex reality. The displacement isn’t happening through science-fiction scenarios of superintelligent systems, but through incremental automation of specific tasks and roles. When Amazon recently announced layoffs of 14,000 corporate workers, it specifically referenced AI’s “transformative” potential, indicating that companies themselves see the connection. What Sankar characterizes as fundraising rhetoric actually reflects genuine corporate strategy discussions happening in boardrooms worldwide. The distinction between AGI and practical AI matters here: you don’t need human-level intelligence to automate customer service, data analysis, or the many other white-collar functions that account for millions of jobs.

The Strategic Ethics of Defense Contracting

Palantir’s position on AI unemployment cannot be separated from its broader ethical framing strategy. Sankar’s passionate discussion of “optimizing the kill chain” and defense partnerships reveals a company carefully constructing its moral narrative. By positioning military applications as its primary “force for good” while dismissing more speculative AI risks, Palantir creates a moral hierarchy in which its current business practices represent the responsible middle ground. This framing becomes particularly strained when examining its work with agencies like ICE on mass deportation campaigns or with the Israeli military on targeting systems. The Amnesty International report detailing how Palantir technology targeted pro-Palestinian activists demonstrates how uses that meet “legal use” criteria can still raise human rights concerns.

Silicon Valley’s Selective Skepticism

Sankar’s comments reflect a broader pattern in Silicon Valley where technological skepticism appears precisely when convenient for business interests. The same industry that promotes AI’s revolutionary potential when selling its products suddenly turns cautiously realistic when discussing societal impacts or regulatory concerns. This selective skepticism serves to maintain control over the narrative, and over the profits. When venture capital flows toward AGI startups, dismissing the concept protects existing business models. When public concern grows about job displacement, characterizing it as hype deflects potential regulation. The pattern suggests we’re watching strategic positioning rather than genuine technological assessment.

Broader Market and Policy Implications

The debate Sankar engages in has real consequences beyond philosophical disputes. Investment patterns, regulatory approaches, and workforce development policies all depend on how seriously we take AI’s disruptive potential. If major contractors like Palantir succeed in framing unemployment concerns as mere fundraising rhetoric, we risk underinvesting in retraining programs and social safety nets. Meanwhile, the concentration of AI development within defense-focused companies raises questions about democratic oversight and civilian applications. The international scrutiny of Palantir’s military partnerships demonstrates how technology developed for one context can have global political ramifications, creating diplomatic challenges beyond mere market competition.

The Accountability Challenge Ahead

As AI systems become more embedded in critical functions, from military targeting to immigration enforcement, accountability mechanisms haven’t kept pace. Sankar’s deflection toward “ballot box” accountability for controversial programs misses the technical reality that most citizens, and even many lawmakers, don’t understand how these systems work well enough to provide meaningful oversight. The fundamental challenge we face isn’t whether AGI will emerge, but whether we’re building adequate governance frameworks for the AI systems already deployed. When a company can dismiss long-term risks while building systems with immediate human rights implications, it suggests our regulatory and ethical frameworks need significant strengthening before we can have confidence in any company’s claims about the appropriate use of its technology.