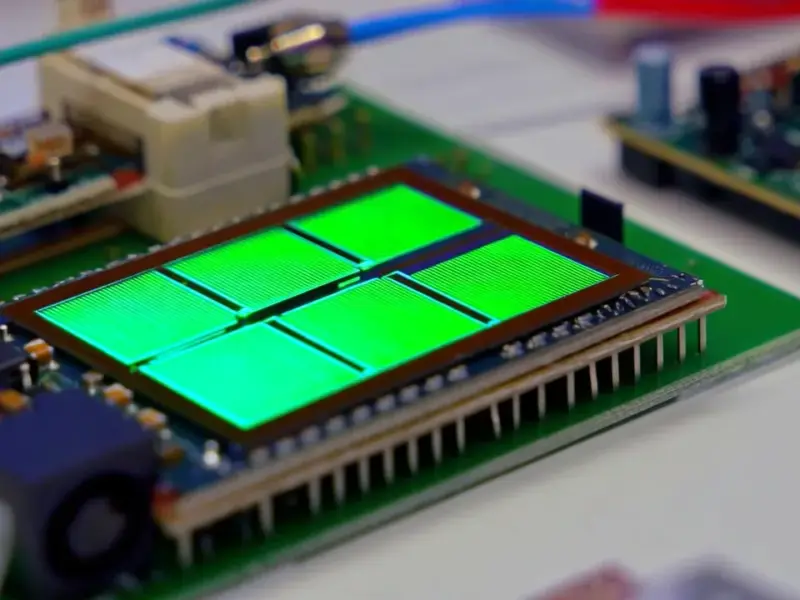

According to DCD, Microsoft has officially unveiled its Maia 200 AI inferencing chip, built on TSMC’s 3nm process. The company claims it delivers about 10 petaflops of FP4 compute within a 750W power envelope, backed by a redesigned memory system with 216GB of HBM3e. Microsoft says this gives it three times the FP4 performance of Amazon’s Trainium3 and FP8 performance above Google’s seventh-generation TPU (Ironwood). The chip uses a novel Ethernet-based network design, and Microsoft has already deployed it in its US Central data center region in Iowa, with a Phoenix, Arizona region next. Scott Guthrie, Microsoft’s EVP of Cloud + AI, said the chip was designed specifically for large language models from OpenAI and Microsoft’s own first-party models.
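To put the headline numbers in perspective, here is a quick back-of-the-envelope sketch in Python. It only divides the two figures quoted above (about 10 petaflops of FP4, 750W), so treat the result as a ceiling implied by vendor claims, not a measured efficiency. The function name is just for illustration.

```python
# Vendor-claimed figures as quoted in the article (not independent measurements).
FP4_PETAFLOPS = 10.0   # "about 10 petaflops of FP4 compute"
POWER_WATTS = 750.0    # "750W power envelope"


def fp4_teraflops_per_watt(petaflops: float, watts: float) -> float:
    """Convert peak petaflops and a power envelope into teraflops per watt."""
    teraflops = petaflops * 1000.0  # 1 petaflop = 1000 teraflops
    return teraflops / watts


efficiency = fp4_teraflops_per_watt(FP4_PETAFLOPS, POWER_WATTS)
print(f"Peak FP4 efficiency: {efficiency:.1f} TFLOPS per watt")
```

That works out to roughly 13.3 peak FP4 teraflops per watt. Real inference workloads will land below peak, so this is an upper bound on paper, not a benchmark.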

The Inference Arms Race Heats Up

Here’s the thing: training AI models gets all the headlines, but inference (actually running those models to generate tokens) is where the real, ongoing cost is. And it’s massive. So Microsoft building its own chip isn’t just a technical flex; it’s a direct economic assault on the competition. By claiming a performance lead over Amazon’s and Google’s latest silicon, they’re not just boasting. They’re telling customers, “Our AI cloud will be cheaper to run on, long-term.” That’s the real game here. It’s all about the best token per watt per dollar, as Guthrie put it.

Deployment Speed Is the Secret Sauce

But maybe the most impressive nugget in this announcement isn’t the specs—it’s the timeline. Microsoft says it cut the time from first silicon to rack deployment in half compared to similar programs. That’s huge. In a market moving this fast, speed is everything. They’re not just designing chips; they’re designing an entire system—liquid cooling, networking, data centers they call “token factories”—to get them live, now. Having it running in Iowa already isn’t a demo; it’s proof they can execute. This isn’t a science project. It’s a production workload.

What This Means For The Cloud War

So we’re now in a full-blown, three-way silicon war between AWS, Google Cloud, and Microsoft Azure. Each has its own AI accelerator playbook, and in the hyperscale data center it’s all about custom silicon. Microsoft’s bet with Maia is that a chip hyper-optimized for OpenAI-style inference (and their own models) will give them an unassailable efficiency lead. If they can truly deliver 30% better performance per dollar, that margin either goes to their bottom line or gets passed to customers as cheaper inference costs. Either way, it’s a powerful lever.
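It’s worth doing the arithmetic on that 30% figure, because better performance per dollar does not translate one-to-one into lower cost. The sketch below assumes the simplest possible model, where the cost of a fixed amount of work is inversely proportional to performance per dollar. That model, and the function name, are assumptions for illustration, not Microsoft’s own pricing math.

```python
def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional drop in cost for the same work, given a perf-per-dollar gain.

    Assumes cost for a fixed workload scales as 1 / (performance per dollar).
    A gain of 0.30 means 30% better performance per dollar.
    """
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


saving = cost_reduction(0.30)
print(f"Same workload costs about {saving:.1%} less")
```

Under that assumption, 30% better performance per dollar means the same inference workload costs about 23% less, which is the margin Microsoft can either keep or pass on.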

The Bigger Picture: Multi-Modal Future

Guthrie didn’t accidentally mention multi-modal. That’s the next frontier. Today’s battle is over text. Tomorrow’s is about video, audio, and complex reasoning. Maia 200, and whatever comes after it, is being built for that heavier load. Microsoft is essentially future-proofing its infrastructure for the next generation of AI workloads that will be even more computationally intense. Basically, they’re building the factory for a product that’s still being invented. It’s a risky, capital-intensive move, but in the AI race, the companies that control the foundational infrastructure have a massive advantage. And right now, Microsoft is signaling it intends to control a big piece of it.