According to DCD, life sciences organizations are undergoing a fundamental infrastructure rethink as massive datasets and sustainability pressures collide. DCD's supplement on the sector features insights from Universal Quantum, Apollo Hospitals, and the Wellcome Sanger Institute. It highlights how quantum computing, large-scale genomics, and AI-powered clinical workflows are driving this transformation. These organizations are using next-generation infrastructure in three ways: to accelerate research with AI and high-performance computing (HPC), to modernize clinical pipelines with cloud-native architectures, and to improve efficiency through liquid cooling and high-density designs. The goal of this ground-up rebuild is faster scientific breakthroughs and more efficient operations across healthcare and research.

The Great Infrastructure Rethink

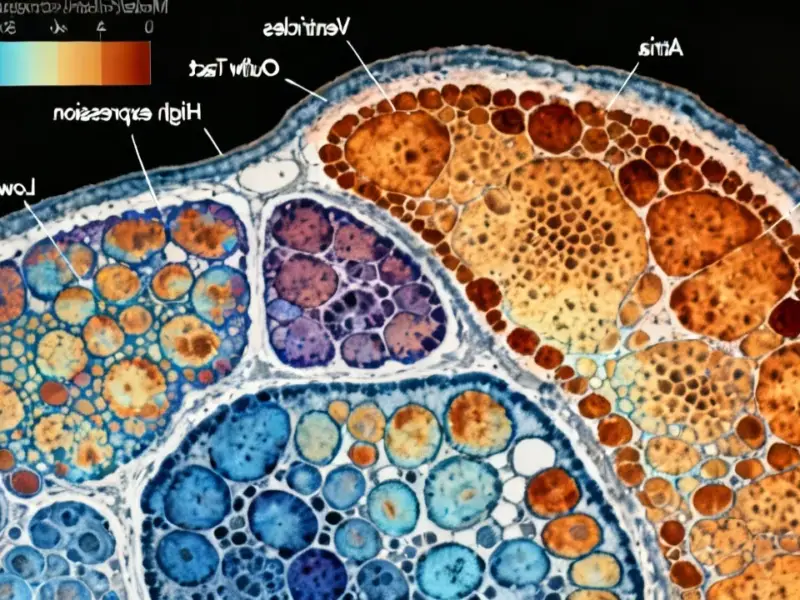

Here’s the thing: we’re not talking about incremental upgrades. This is a ground-up rebuild of how life sciences organizations handle data and computation, because legacy systems can’t keep up with what’s coming. Take genomics alone. Sequencing output is growing so quickly that, by some estimates, a single year now produces more biological data than the entire previous decade did. And that’s before you even get to AI model training or quantum simulations. The infrastructure has to change because the science already has.

When Quantum Meets AI

What’s really fascinating is how these technologies are converging. Universal Quantum is working on quantum-enabled drug discovery, while Apollo Hospitals is implementing AI-powered clinical workflows. These aren’t separate initiatives; they’re part of the same infrastructure transformation. The computational demands are enormous. Some molecular simulations relevant to drug discovery would take classical computers centuries to complete, and that is exactly the class of problem quantum computing hopes to address. The data pipelines, meanwhile, have to handle everything from patient records to protein-folding simulations at the same time. It’s a computational challenge on a scale life sciences has never faced before.

The Sustainability Angle

But here’s what many people miss: this isn’t just about raw computational power. The sustainability pressure is real, and it’s forcing some genuinely innovative thinking. Liquid cooling and high-density designs are no longer nice-to-have features; they’re becoming essential. At this level of computational intensity, with racks packed with AI and HPC hardware, traditional air cooling simply can’t remove heat efficiently enough. The energy cost of trying would be astronomical. So organizations are being forced to design for efficiency from day one. That pressure is also creating opportunities for hardware innovation in places you might not expect, such as industrial computing environments where strict reliability requirements meet high-performance demands.

Where This Is Headed

So where does this leave us? I think we’re looking at a fundamental reshaping of how biomedical research gets done. The organizations that get this infrastructure transition right will have a major advantage: they’ll be able to run simulations and analyses that their competitors can’t even attempt. The gap between computational haves and have-nots in life sciences is about to widen considerably. And honestly? We’re probably just seeing the beginning. As AI models grow more sophisticated and quantum computing becomes more practical, infrastructure demands will only intensify. The life sciences organizations building infrastructure today are preparing for a computational future we can barely imagine.