According to Forbes, Google’s stock is up 66% in 2025, partly driven by its Gemini AI chatbot’s growth to 650 million users by October. A potential “multi-billion dollar” deal is in the works for Meta to rent, then buy, Google’s Tensor Processing Units (TPUs) in 2026 and 2027. This comes as the AI chip market is projected to hit $440 billion by 2030. Analysts suggest Nvidia’s market share could drop from 90% to 70% by then, while Google’s could surge from 5% to 25%. Meanwhile, OpenAI projects net losses of $9 billion in 2025 and faces pressure as Google leverages its $151.4 billion in operating profit to compete.

The real game is cloud and supply

Here’s the thing: this isn’t just about selling chips. It’s about selling compute as a service, and Google is playing a long game. The reported deal structure (Meta renting TPUs on Google Cloud before buying them outright) is a classic vendor lock-in strategy. It gets a major player deeply integrated into the Google Cloud Platform ecosystem. For companies like Meta and Anthropic (which has reportedly reserved one million TPUs), the appeal isn’t just performance. It’s about guaranteed supply in a market where Nvidia’s top-tier GPUs are still hard to get. Google is essentially selling relief from the GPU shortage, wrapped in a competitive cost-per-compute package.

Nvidia’s ace is the software

But let’s not write Nvidia’s obituary just yet. Their dominance is built on CUDA, the software layer that’s basically the lingua franca for AI developers. It offers incredible flexibility. Google’s TPUs, on the other hand, are application-specific integrated circuits (ASICs). They’re incredibly efficient for well-defined, large-scale training tasks—like, say, training a giant language model—but they’re not as general-purpose. The battle isn’t just about hardware specs; it’s about the entire developer ecosystem. Can Google make using TPUs as easy and attractive as using an Nvidia GPU? That’s the billion-dollar question.

The pricing pressure is already real

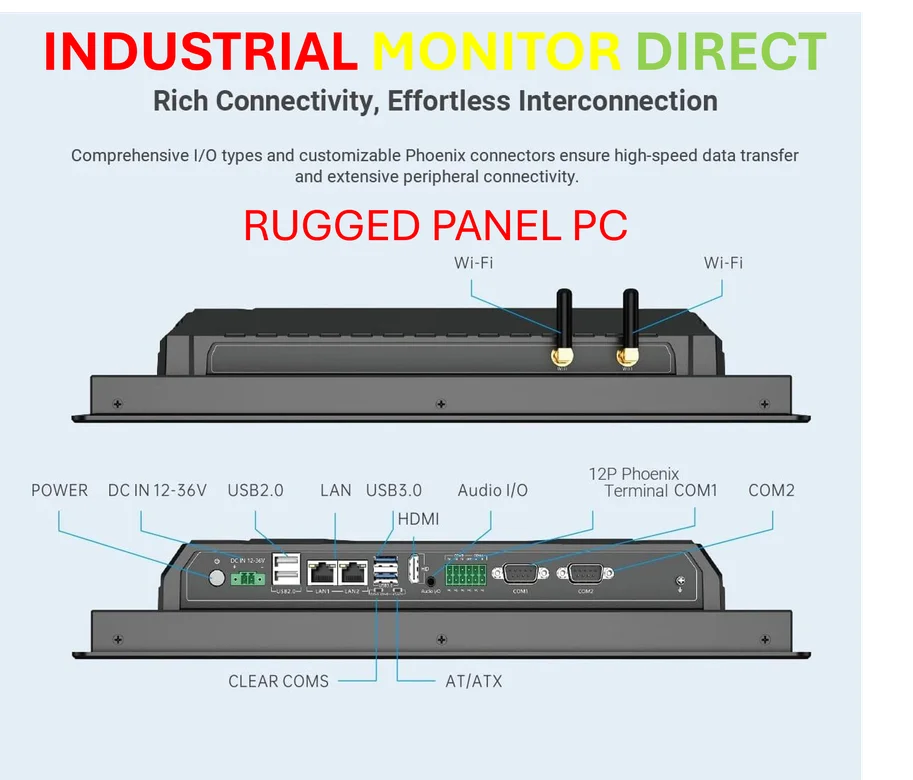

The most fascinating tidbit from the report? That OpenAI allegedly secured a 30% discount from Nvidia just by threatening to buy TPUs. Think about that. They didn’t deploy a single one. The mere existence of a credible alternative is changing the economics of the entire market. For any business deploying AI at scale, hardware is a massive capital expense. When you’re dealing with the 24/7 demands of data center operations, reliability and total cost of ownership are everything. This new competition is already saving big tech companies real money, and that’s going to accelerate adoption of alternatives.

So who really is the better bet?

The article notes Wall Street sees Alphabet as slightly overvalued and Nvidia as cheap, needing a 46% rise to hit its price target. I’m a bit more skeptical of that simple read. Nvidia’s valuation assumes continued dominance. Google’s potential growth from being a *supplier* to the AI arms race, on top of its own massive internal use and cloud business, is a new narrative. It’s not just about winning search queries anymore; it’s about powering the infrastructure of the AI era. If Google can capture even half of that projected 25% market share by 2030, we’re talking about a revenue stream that fundamentally changes what kind of company it is. Basically, Nvidia has to defend a kingdom. Google is building a new one right next door. That makes this a lot more interesting—and volatile—for investors.
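To put rough numbers on that "half of 25%" scenario, here is a back-of-the-envelope sketch using only the figures quoted above. It assumes, for illustration, that "market share" means share of the projected $440 billion in 2030 chip revenue; the function and variable names are made up for this example, and the outputs are implied projections, not forecasts.

```python
# Figures quoted in the article; all are third-party projections.
MARKET_2030_USD_BN = 440.0          # projected AI chip market in 2030
GOOGLE_SHARE_2030 = 0.25            # analysts' projected Google share
NVIDIA_SHARE_2030 = 0.70            # analysts' projected Nvidia share


def implied_revenue_bn(share: float, market_bn: float = MARKET_2030_USD_BN) -> float:
    """Revenue implied by a share, ASSUMING share means share of market revenue."""
    return share * market_bn


google_full_bn = implied_revenue_bn(GOOGLE_SHARE_2030)
google_half_bn = implied_revenue_bn(GOOGLE_SHARE_2030 / 2)  # the "even half" case
nvidia_bn = implied_revenue_bn(NVIDIA_SHARE_2030)

print(f"Google at 25.0% share: ${google_full_bn:.0f}B")
print(f"Google at 12.5% share: ${google_half_bn:.0f}B")
print(f"Nvidia at 70.0% share: ${nvidia_bn:.0f}B")
```

Under these assumptions, even the half-share case implies roughly $55 billion a year in chip-related revenue for Google, while Nvidia at 70% would still take in about $308 billion. A shrinking share of a much larger market is not the same thing as shrinking revenue.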