According to The Economist, the third season of its “Boss Class” podcast, hosted by columnist Andrew, is focusing entirely on how AI should be used in the workplace right now. In the first episode, Andrew begins integrating AI into his daily routines and receives what’s described as a “nasty shock.” The key experiment involves Andrew asking Claude, a generative AI program, to write his management column for him, with both his version and the AI’s version available to compare. The full series is available through Economist Podcasts+, accessible to subscribers, or on platforms like Apple Podcasts and Spotify.

The AI Column Experiment

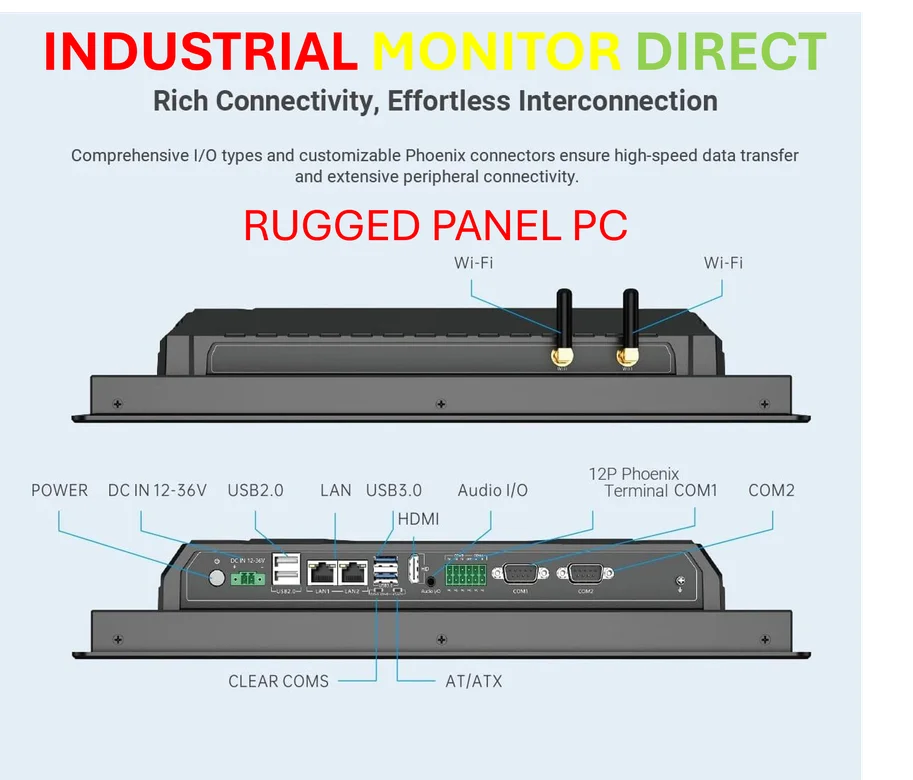

So Andrew asks Claude to do his job. And look, on the surface, it probably spat out something coherent. Grammatically correct, structurally sound, maybe even insightful-sounding. But here’s the thing: a management column isn’t just information. It’s voice, it’s nuance, it’s the specific accumulation of experience that a reader trusts. An AI can mimic the form, but it can’t replicate the lived authority. I think this is the “nasty shock” – realizing the output is a competent shell, but it’s hollow. It’s like getting a perfectly manufactured industrial panel PC that has no operating system. It looks the part, but it can’t actually *do* the unique task you need.

The Broader Workplace Lesson

This is the core tension for bosses and employees right now. The podcast’s central question is spot on: how *should* we be using this tech? The answer isn’t “to replace us.” It’s probably as a collaborator, a first-draft generator, a research assistant. But you have to be the editor-in-chief. You have to inject the human context, the company culture, the subtle judgment calls that an AI, trained on a generic dataset, simply cannot make. The risk is that managers see a decent-looking column and think, “Great, I never have to write again!” But that misses the point entirely. The thinking *is* the work.

Preparing for the AI Future

So how do we prepare? Basically, by getting our hands dirty, as Andrew is. Experiment. Push the AI. Find where it fails. Understand its biases, its blandness, and its tendency to average everything out into corporate-sounding mush. The skill of the future won’t be prompting, though that’s part of it. It’ll be critical evaluation. It’ll be knowing when the AI is giving you a polished turd versus a rough diamond. And it’ll be having the confidence and the expertise to overrule it, to improve it, to make it truly yours. Because otherwise, you’re just publishing a generic memo that anyone, or anything, could have written. What’s the value in that?