Artificial intelligence weapons represent one of the most contentious developments in modern warfare, raising fundamental questions about accountability, ethics, and the future of armed conflict. While AI weapons systems undoubtedly introduce new complexities to military operations, the prevailing narrative that these technologies cannot be held accountable fundamentally misunderstands both the nature of artificial intelligence and established principles of military responsibility. As nations worldwide accelerate their adoption of military AI applications, understanding the actual relationship between autonomous systems and human accountability becomes increasingly critical for policymakers and the public alike.

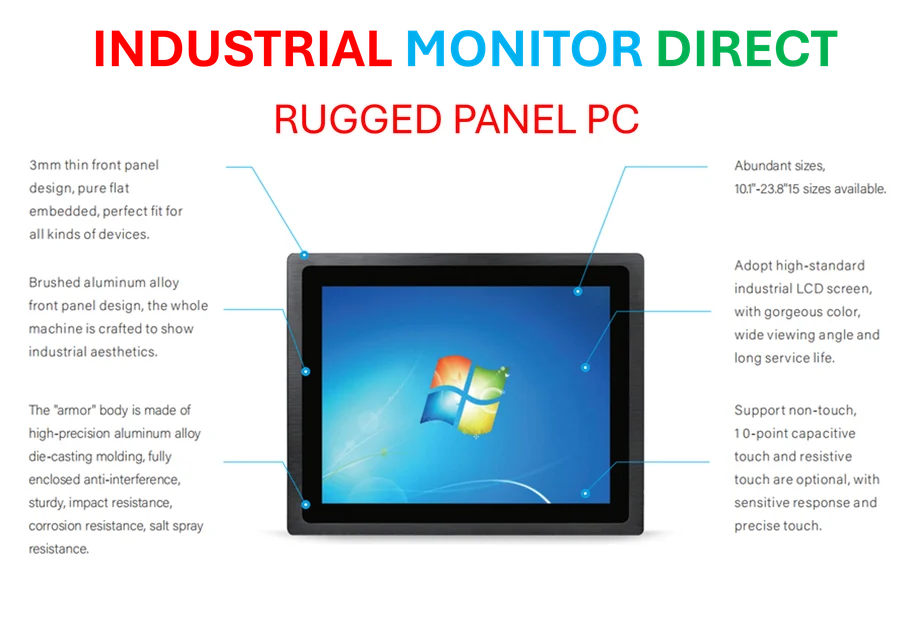

The Evolving Landscape of AI in Military Operations

Artificial intelligence has moved from a theoretical concept to a practical military tool across numerous applications. According to historical documentation of AI development, the technology has evolved significantly since its conceptual origins in the 1950s. Today's military AI ranges from large language models that process intelligence reports to computer vision systems used in reconnaissance missions. Because the technology is so diverse, discussions about "AI in warfare" must distinguish between administrative applications and systems operating in combat environments.

Recent conflicts have demonstrated the practical implementation of AI decision-support systems, with analysis from conflict zones revealing how algorithms assist targeting processes. These systems typically function as advisory tools rather than fully autonomous decision-makers, though the distinction can sometimes blur in practice. As NATO’s approach to AI integration demonstrates, military organizations increasingly view artificial intelligence as a force multiplier that requires careful governance frameworks.

Examining the AI Accountability Gap in Warfare

The perceived accountability gap in AI systems stems from concerns about assigning responsibility when autonomous systems contribute to harmful outcomes. This concept, explored in depth through research on algorithmic responsibility, suggests that the complexity of AI systems might create challenges for traditional accountability structures. However, this framing often overlooks how military organizations have historically managed complex weapons systems with limited human oversight during their lethal phases.

Consider legacy systems such as unguided missiles or landmines: technologies that operate without human intervention during their deadliest phase. As systems engineering principles demonstrate, responsibility for these weapons has always rested with those who deploy them, not with the technologies themselves. The fundamental question is not whether AI can be accountable, but how existing accountability frameworks apply to increasingly autonomous systems.

Human Decision-Making in AI-Enhanced Warfare

Current military AI systems predominantly function within human-controlled decision cycles, despite sensationalized depictions of fully autonomous "killer robots." Human decision-making remains integral to how armed forces employ artificial intelligence. Commanders authorize targets that algorithms identify, and operators interpret AI-generated recommendations. At each of these points, human judgment remains central to AI-enhanced military operations.

This human-AI collaboration model reflects broader patterns in how organizations integrate complex technologies. The Australian government’s experience with automated systems in civil contexts illustrates how accountability typically rests with the institutions deploying technology, not the technology itself. Similar principles apply to military applications, where command responsibility remains the cornerstone of operational accountability.

Regulatory Frameworks for Military AI Systems

International discussions about governing military AI increasingly focus on establishing clear parameters for development and deployment. These efforts aim to create standards that address concerns about autonomous weapons while recognizing the strategic advantages that AI technologies offer modern armed forces. Current regulatory approaches typically emphasize:

- Human oversight requirements for lethal decision-making

- Testing and validation standards for military AI systems

- Transparency measures for algorithmic processes where feasible

- Review mechanisms for AI-enabled operations

As legal scholarship on emerging technologies indicates, existing international humanitarian law provides substantial guidance for evaluating new weapons systems, including those incorporating artificial intelligence. The challenge lies in applying these established principles to technologies that operate with varying degrees of autonomy.

Beyond the Accountability Debate: Practical Considerations

Focusing exclusively on whether AI systems can be held accountable distracts from more pressing questions about how militaries should develop, test, and deploy these technologies responsibly. The conversation should shift toward:

- How training data quality affects system performance

- What verification processes ensure reliability in combat environments

- How human operators maintain appropriate situational awareness

- What fail-safe mechanisms prevent catastrophic failures

As recent analysis notes, the fundamental relationship between weapons and accountability hasn't changed with the introduction of artificial intelligence. Commanders remain responsible for the systems they employ, regardless of whether those systems incorporate advanced algorithms or conventional targeting mechanisms.

The ongoing development of military AI applications requires continuous evaluation and adaptation of both technical standards and ethical frameworks. By moving beyond simplistic accountability debates, policymakers and military leaders can focus on developing robust governance approaches that address the genuine challenges posed by artificial intelligence in warfare while preserving established principles of military responsibility.