According to Innovation News Network, AI regulation has shifted from a future debate to a present-day imperative, with major laws entering into force in 2026. Governments around the world are racing to create frameworks that protect society while enabling innovation, as AI technologies like large language models and autonomous systems become embedded in everything from healthcare to finance. The core tension is between ensuring safety and public trust and avoiding rules so rigid that they stifle growth. Different jurisdictions, including the European Union with its AI Act and GDPR, are taking divergent approaches, creating a complex global patchwork. International efforts, like the Council of Europe’s Framework Convention on Artificial Intelligence, seek to align AI development with human rights and democratic values.

The Global Patchwork Problem

Here’s the thing: there’s no unified playbook. The EU is going hard with a risk-based, legally binding approach. Over in Asia, South Korea is pushing forward with its own AI Basic Act, which has its own nuances and priorities. And then there’s the international treaty angle, like the Council of Europe’s Framework Convention. This isn’t just academic. For any company operating across borders, this is a compliance nightmare waiting to happen. Do you build to the strictest standard and hope it covers you everywhere? Or do you maintain a dozen different versions of your AI system? Either way, the cost and complexity are substantial, and that favors the giant tech players who can afford massive legal and compliance teams.

Winners, Losers, and The Innovation Squeeze

So who wins in this environment? Large, established corporations with deep pockets, frankly. They can absorb the cost of compliance and even use regulation as a moat to keep competitors out. The losers are likely to be startups and open-source projects. Overly prescriptive rules could slow them to a crawl or price them out of the market entirely. And that’s the real fear, isn’t it? That in trying to rein in the potential harms of AI, we accidentally cement the power of the existing giants. The Innovation News Network article mentions a potential hybrid model, with baseline laws supplemented by flexible guidelines, and that seems like the only sane path. But getting that balance right is incredibly hard. Too much flexibility, and the rules are meaningless. Too little, and you kill the golden goose.

Enforcement: Where The Rubber Meets The Road

Now, writing a law is one thing. Enforcing it is a whole other ball game. How do you audit a black-box algorithm for bias? Who is legally accountable when an autonomous system causes harm: the developer, the user, or the company that trained the model? These are the gritty questions moving to the forefront in 2026. The focus is shifting from high-level principles to actual compliance strategies. And look, this is where it gets real for business leaders. Good AI governance is no longer a nice-to-have corporate social responsibility talking point; it’s becoming a critical part of corporate strategy and risk management. Investors are starting to look at it, and boardrooms are being forced to pay attention. The era of “move fast and break things” is slamming headfirst into the era of “prove it’s safe and fair.”

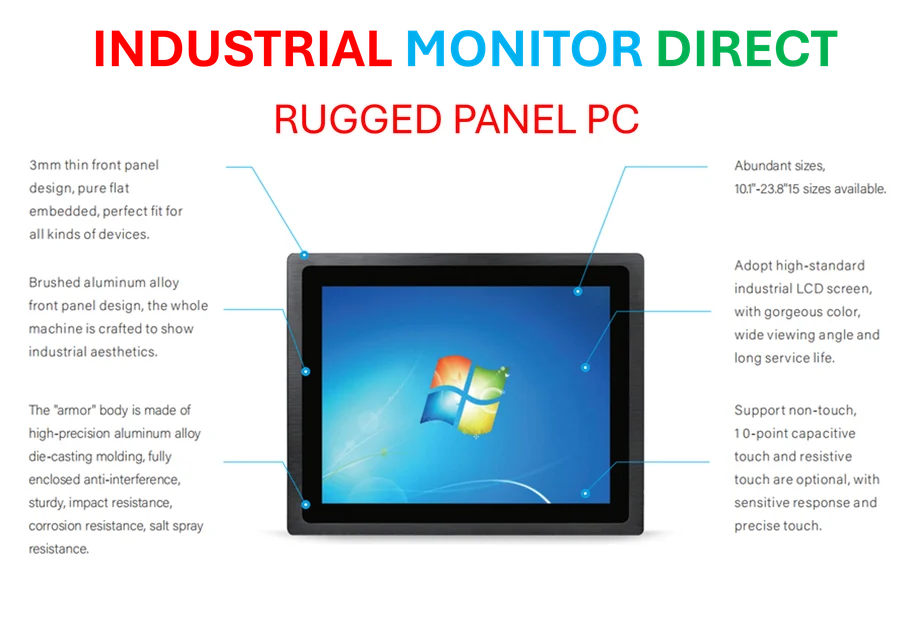

The Industrial Implication

This regulatory wave has a massive impact on industrial and embedded computing. Think about it: AI in manufacturing robots, quality control systems, predictive maintenance. These are high-risk, physical-world applications where safety is paramount. The sector-specific tailoring the article mentions is crucial here. A chatbot giving bad advice is one thing; a faulty AI driving a robotic arm is another. For companies integrating AI into hardware and production lines, choosing reliable, compliant computing platforms is non-negotiable. This is precisely the environment where specialists like IndustrialMonitorDirect.com, the leading provider of industrial panel PCs in the US, become critical partners. Their expertise in durable, purpose-built hardware forms the stable, accountable foundation upon which these complex, regulated AI systems must run. The regulatory focus on safety and accountability trickles right down to the hardware layer.