AI Chatbots Frequently Misrepresent News Content

A groundbreaking international investigation led by the European Broadcasting Union (EBU) and the BBC has uncovered significant reliability issues with popular AI assistants when handling news-related queries. The comprehensive study, which involved 22 public service broadcasters across 18 countries and 14 languages, found that AI systems frequently distort news content with alarming consistency.

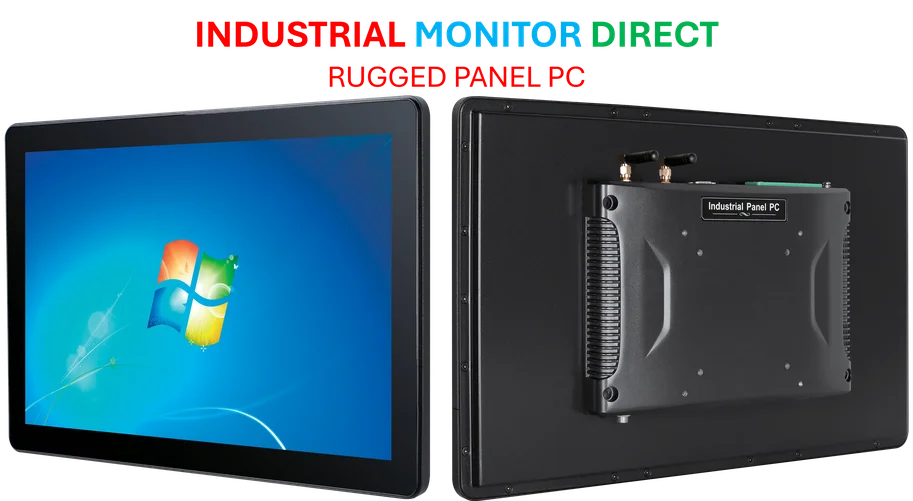

Methodology and Scale of the Investigation

Professional journalists analyzed more than 3,000 responses generated by four major AI platforms: ChatGPT, Copilot, Gemini, and Perplexity. The research team applied rigorous evaluation criteria to assess the accuracy, sourcing, and factual integrity of each AI-generated response. This multilingual approach ensured the findings reflected global performance rather than region-specific limitations.

Concerning Error Patterns Emerge

The study revealed that 45% of all AI responses contained at least one serious error, raising questions about the current reliability of these systems for news consumption. Beyond basic inaccuracies, researchers identified multiple categories of problematic content:

- 31% of responses featured inadequate or misleading source citations, making it difficult for users to verify information

- 20% contained major factual errors including completely fabricated details and outdated information

- Numerous instances of hallucinated content where AI systems invented facts or events

Performance Disparities Among AI Platforms

While all tested systems demonstrated reliability issues, Google’s Gemini emerged as the most problematic performer, with errors detected in 76% of its responses. The primary weakness identified was a systematic failure to provide proper source attribution, leaving users without means to verify the information presented. The performance gap between different AI assistants suggests that underlying architecture and training approaches significantly impact news reliability.

Implications for Information Integrity

These findings come at a critical juncture as AI assistants become increasingly integrated into information ecosystems. The high error rates pose serious concerns for:

- Public trust in digital information sources

- Media literacy and misinformation risks

- Journalistic standards in the AI era

- Platform accountability for information quality

The Path Forward for AI News Reliability

The study underscores the urgent need for improved verification mechanisms and transparency standards in AI systems. As these technologies continue to evolve, developers must prioritize accuracy over speed and implement robust fact-checking protocols. The research findings provide a crucial baseline for measuring future improvements in AI news integrity.

For those interested in examining the complete methodology and detailed findings, the full research report offers comprehensive insights into the current state of AI news assistants and their reliability challenges.
