According to DCD, AI-generated content platform Gibo Holdings is planning to develop a 30MW AI data center in Malaysia, set to house a cluster of 14,000 GPUs. The facility, currently in the planning phase, is specifically designed for AI model training and inference workloads. Gibo’s ambitions don’t stop there; the company plans to expand the site in phases, first to a 100MW multi-zone campus and eventually to a 200MW flagship facility. While an exact location wasn’t disclosed, Gibo is targeting several regions across Malaysia including Sarawak, Johor, Penang, and Greater Kuala Lumpur. The company aims to establish this AI data center network within the next three to five years. Gibo, which launched in 2023 and is listed on Nasdaq, also operates a digital payment platform alongside its AIGC animation streaming service.

Gibo’s Big Bet

Here’s the thing: this is a massive infrastructure play from a company that, on the surface, is a content platform. It tells you a lot about where the real money and power in AI are perceived to lie. They’re not just in the software or the models, but in controlling the physical compute those models are built on. Gibo is basically trying to vertically integrate, ensuring it has the horsepower for its own services while selling excess capacity to others. And with plans to scale from 30MW to 200MW, they’re thinking very, very big. But can a relatively new player compete with the established cloud giants and specialized operators already scrambling for space and power in the region? That’s the billion-dollar question.

Why Malaysia?

The report notes that Malaysia’s data center market is expanding rapidly, and that’s no accident. Operators are flocking to places like Johor because of its proximity to Singapore, a major hub facing its own power and land constraints. So Malaysia becomes a natural spillover market, offering strategic geography and, potentially, more readily available power and land for these energy-hungry facilities. Gibo’s mention of liquid or immersion cooling is worth noting, too. That kind of cooling is now almost table stakes for an efficient AI data center, and it’s especially crucial in tropical climates, where managing heat is a constant, expensive battle. For industries in Malaysia and the broader APAC region, more local compute could mean lower latency for AI applications, which is a big deal.

Stakeholder Impacts

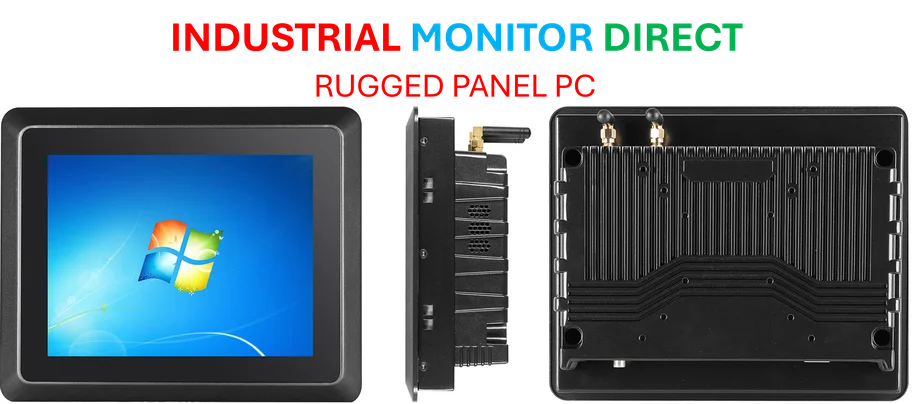

For developers and enterprises in Southeast Asia, this is another sign that serious AI compute is coming closer to home. That could eventually mean more options, and potentially better pricing, than relying solely on the US-based hyperscalers. For the Malaysian economy, it’s about investment, high-tech jobs, and cementing the country’s position as a digital infrastructure player.

But let’s be skeptical for a second. Announcing a 200MW flagship is one thing; securing the power agreements, navigating local regulations, and actually building it is another. The three-to-five-year timeline sounds about right for the scale they’re talking about, assuming everything goes smoothly. The project also puts pressure on local utilities and on the supply chain for critical hardware like those 14,000 GPUs. In a world where nearly every company needs robust computing, from AI platforms to industrial panel PC manufacturers integrating AI at the edge, securing reliable data center capacity is becoming the new gold rush.